This is part one of a series I’ll be writing on grading.

Guskey, Kashtan, and Reeves

On his blog, Douglas Reeves writes:

I know of few educational issues that are more fraught with emotion than grading. Disputes about grading are rarely polite professional disagreements. Superintendents have been fired, teachers have held candle-light vigils, board seats have been contested, and state legislatures have been angrily engaged over such issues as the use of standards-based grading systems, the elimination of the zero on a 100-point scale, and the opportunities for students to re-submit late or inadequate work.

Miki Kashtan, co-founder of Bay Area Nonviolent Communication, succinctly and insightfully explains what’s needed to ground intense conversations in cooperation and goodwill:

Focusing on a shared purpose and on solutions that work for everyone brings attention to what a group has in common and what brings them together. This builds trust in the group, and consequently the urge to protect and defend a particular position diminishes.

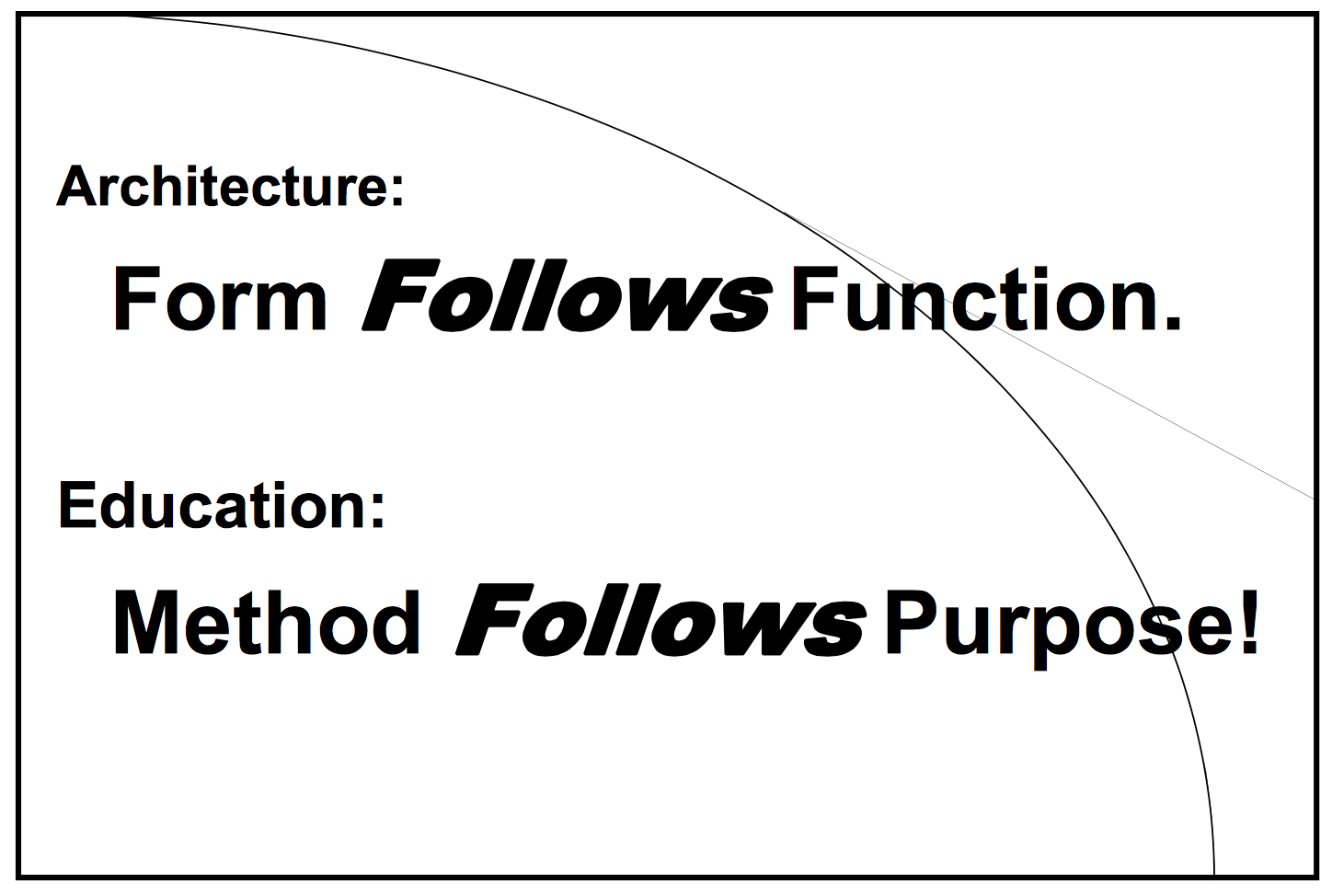

In On Your Mark (Solution Tree, 2014), Thomas Guskey echoes Kashtan’s point and draws on Jay McTighe and Grant Wiggins’s work on backward design when he writes, “Method follows purpose” (p. 15).

Guskey goes on to emphasize the importance of beginning with the end in mind when we come together to discuss our craft with other educators:

Reform initiatives that set out to improve grading and reporting procedures must begin with comprehensive discussions about the purpose of grades … (p. 21)

Summary

- Discussing grading can quickly become prohibitively emotional. (Reeves)

- Focusing on a shared purpose helps those of us who have already put a stake in the ground to be willing, eager and able to move it. (Kashtan)

- Before considering the “how” of grading, deeply consider the “why.” (Guskey)

A couple of questions on my mind

- What practices do you, your department, and/or your institution have in place to facilitate difficult conversations about grading, reporting, and assessment?

- To what extent would it be a useful exercise for each department within a school to produce its own purpose statement for grading? (“The purpose of grades within the ___ department at ____ School is …”)

More to come.